Hui Bai, Ran Cheng*, Yaochu Jin

Abstract:

Reinforcement learning (RL) is a machine learning approach that trains agents to maximize cumulative rewards through interactions with environments. The integration of RL with deep learning has recently led to impressive achievements in a wide range of challenging tasks, including board games, arcade games, and robot control. Despite these successes, several critical challenges remain: brittle convergence properties caused by sensitive hyperparameters, difficult temporal credit assignment with long time horizons and sparse rewards, a lack of diverse exploration (particularly in continuous search spaces), difficult credit assignment in multi-agent reinforcement learning, and conflicting reward objectives. Evolutionary computation (EC), which maintains a population of learning agents, has demonstrated promising performance in addressing these limitations. This article presents a comprehensive survey of state-of-the-art methods for integrating EC into RL, referred to as evolutionary reinforcement learning (EvoRL). We categorize EvoRL methods according to key research areas in RL, including hyperparameter optimization, policy search, exploration, reward shaping, meta-RL, and multi-objective RL. We then discuss future research directions in terms of efficient methods, benchmarks, and scalable platforms. This survey serves as a resource for researchers and practitioners interested in EvoRL, highlighting important challenges and opportunities for future research. With its help, researchers and practitioners can develop more efficient methods and tailored benchmarks for EvoRL, further advancing this promising cross-disciplinary research field.

Key Research Fields of Evolutionary Reinforcement Learning

Evolutionary computation (EC) refers to a family of stochastic search algorithms developed based on the principle of natural evolution. The primary objective of EC is to approximate the global optima of optimization problems by iteratively applying a range of mechanisms, such as variation (i.e., crossover and mutation), evaluation, and selection. Among the various EC paradigms, Evolution Strategies (ESs) are the most widely adopted in EvoRL, together with the classic Genetic Algorithms (GAs) and Genetic Programming (GP).
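The iterative cycle of variation, evaluation, and selection can be sketched in a few lines. The following is a minimal, hypothetical genetic-algorithm loop, not code from the survey: it assumes a toy real-valued objective (maximizing the negative sum of squares, with its optimum at the origin) in place of an RL return, uniform crossover, Gaussian mutation, and elitist truncation selection over parents plus offspring. All function names and parameter values are illustrative assumptions.

```python
import random

def fitness(x):
    # Hypothetical toy objective standing in for an RL return:
    # maximize -sum(x_i^2); the global optimum is x = 0.
    return -sum(v * v for v in x)

def crossover(a, b, rng):
    # Uniform crossover: each gene is copied from one of the two parents.
    return [ai if rng.random() < 0.5 else bi for ai, bi in zip(a, b)]

def mutate(x, rng, sigma=0.1):
    # Gaussian mutation applied independently to every gene.
    return [v + rng.gauss(0.0, sigma) for v in x]

def evolve(dim=5, pop_size=20, generations=100, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        # Variation: build offspring from two randomly chosen parents.
        offspring = []
        for _ in range(pop_size):
            p1, p2 = rng.sample(population, 2)
            offspring.append(mutate(crossover(p1, p2, rng), rng))
        # Evaluation + selection: rank parents and offspring together by
        # fitness and keep the best pop_size (elitist, so the best never gets worse).
        population = sorted(population + offspring, key=fitness, reverse=True)[:pop_size]
    return population[0]

best = evolve()
print(round(fitness(best), 3))
```

In an EvoRL setting, `fitness` would instead roll out the policy encoded by each individual and return its cumulative reward, which is typically where almost all of the computation goes.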

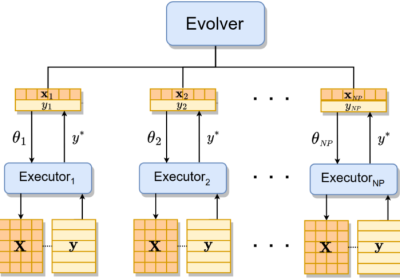

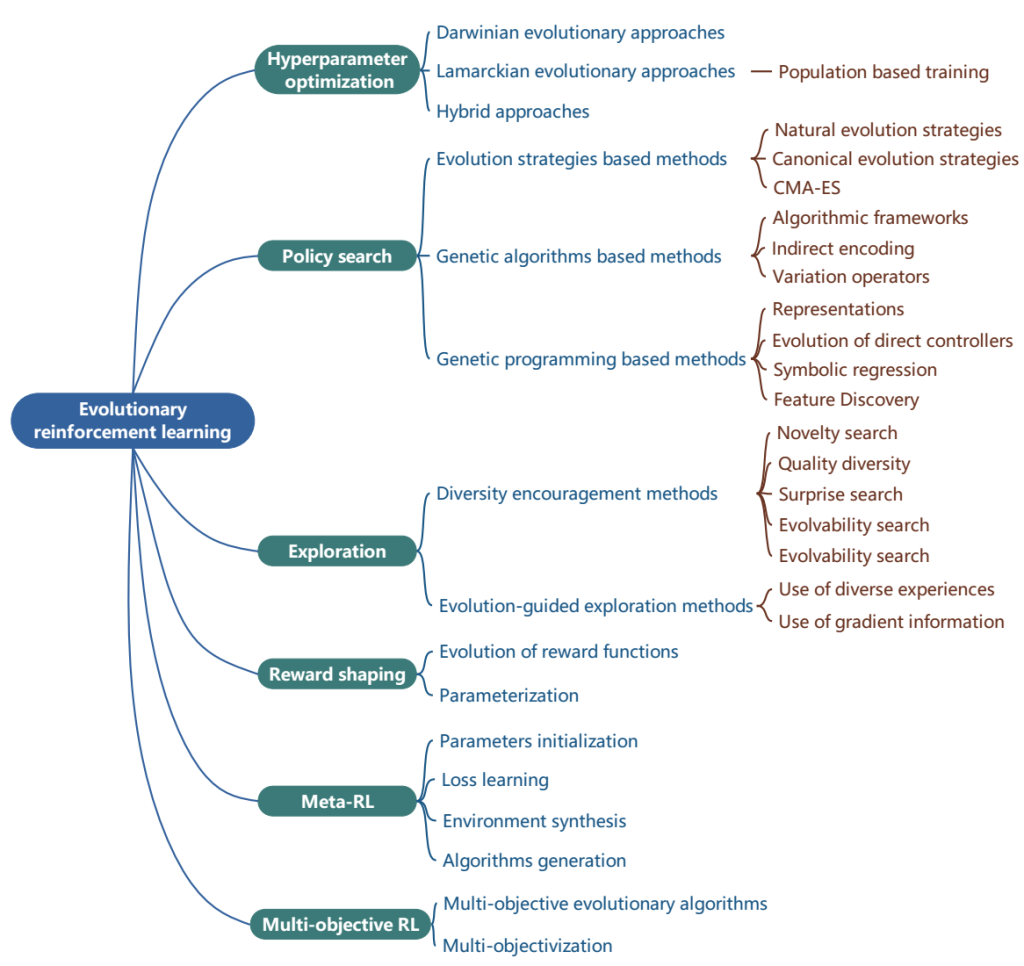

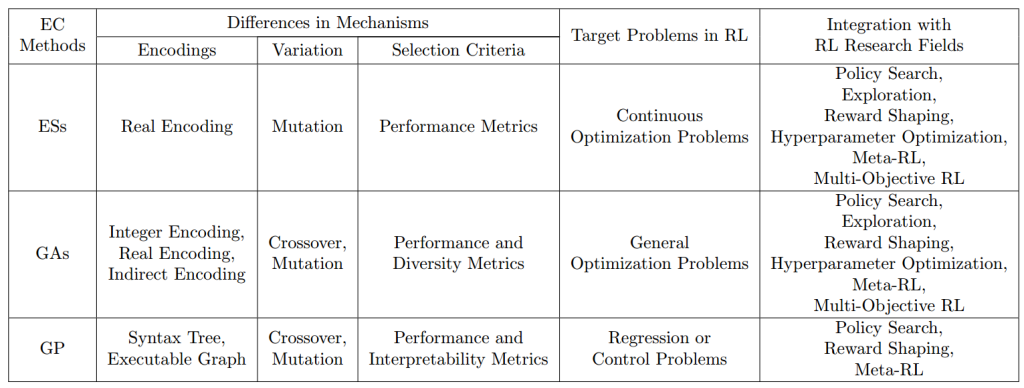

Specifically, EC has been introduced into six key research fields of RL: hyperparameter optimization, policy search, exploration, reward shaping, meta-RL, and multi-objective RL, as presented in Figure 1. Because the three EC paradigms (i.e., ESs, GAs, and GP) differ in their mechanisms, the problems they target and the RL research fields they are mainly applied to also differ somewhat; detailed comparisons are listed in Table 1. ESs employ real-valued encodings and mimic gradient-based methods, so they are especially suited to continuous optimization problems with a large number of decision variables (e.g., neural-network-based policy search). GAs support various encoding schemes and often incorporate diversity metrics, so they can be applied to a wide range of optimization problems across the RL research fields. GP uses tree-based or graph-based encodings to generate interpretable, programmatic solutions and is mainly applied to regression problems or direct control problems.
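To make concrete how ESs can mimic gradient-based methods, here is a minimal sketch of one common ES variant: it estimates a search gradient from mirrored (antithetic) Gaussian perturbations using only objective evaluations, then takes a gradient-ascent step. This is an illustration under stated assumptions, not a specific method from the survey: the quadratic `objective` is a hypothetical stand-in for the episodic return of a policy with parameters `theta`, and `sigma`, `lr`, `n_pairs`, and `iterations` are arbitrary illustrative values.

```python
import random

def objective(theta):
    # Hypothetical stand-in for a policy's episodic return;
    # maximized at theta = [1, 1, ..., 1].
    return -sum((t - 1.0) ** 2 for t in theta)

def es_gradient(f, theta, sigma, n_pairs, rng):
    """Antithetic ES estimate of the gradient of f at theta:
    g ~ 1 / (2 * n_pairs * sigma) * sum_i [f(theta + sigma*eps_i) - f(theta - sigma*eps_i)] * eps_i
    """
    dim = len(theta)
    grad = [0.0] * dim
    for _ in range(n_pairs):
        eps = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        f_plus = f([t + sigma * e for t, e in zip(theta, eps)])
        f_minus = f([t - sigma * e for t, e in zip(theta, eps)])
        # Weight each perturbation direction by the return difference it produced.
        for j in range(dim):
            grad[j] += (f_plus - f_minus) * eps[j]
    return [g / (2 * n_pairs * sigma) for g in grad]

def evolution_strategy(dim=10, sigma=0.1, lr=0.05, n_pairs=25, iterations=200, seed=0):
    rng = random.Random(seed)
    theta = [0.0] * dim
    for _ in range(iterations):
        grad = es_gradient(objective, theta, sigma, n_pairs, rng)
        # Gradient-ascent step driven only by function evaluations (no backpropagation).
        theta = [t + lr * g for t, g in zip(theta, grad)]
    return theta

theta = evolution_strategy()
print([round(t, 2) for t in theta])
```

Because each perturbed evaluation is independent, the inner loop parallelizes naturally across workers, which is one reason ESs scale well to policies with many parameters.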

Figure 1: Key research fields of evolutionary reinforcement learning. Hyperparameter optimization is a universal approach that can be applied to algorithms in the other five research fields to realize end-to-end learning while improving performance. Policy search seeks to identify a policy that maximizes the cumulative reward for a given task. Exploration encourages agents to visit more states and actions and trains robust agents that respond better to dynamic changes in environments. Reward shaping aims to augment the original reward with additional shaping rewards for tasks with sparse rewards. Meta-RL seeks to develop a general-purpose learning algorithm that can adapt to different tasks. Multi-objective RL aims to obtain agents that represent trade-offs in tasks with multiple conflicting objectives.
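In multi-objective RL, the agents that represent trade-offs among conflicting objectives are typically identified by Pareto non-dominance. The sketch below assumes that every objective is maximized and uses hypothetical two-objective returns; it shows the dominance test that EC-based multi-objective methods commonly rely on when selecting such agents, and is an illustration rather than an algorithm taken from the survey.

```python
from typing import List, Sequence

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    # a dominates b (maximization) if a is no worse in every objective
    # and strictly better in at least one.
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(returns: List[Sequence[float]]) -> List[int]:
    # Indices of agents whose return vectors no other agent dominates.
    return [
        i for i, r in enumerate(returns)
        if not any(dominates(other, r) for j, other in enumerate(returns) if j != i)
    ]

# Hypothetical per-agent returns on two conflicting objectives.
agent_returns = [(10.0, 1.0), (8.0, 4.0), (5.0, 5.0), (4.0, 3.0), (1.0, 9.0)]
print(pareto_front(agent_returns))  # → [0, 1, 2, 4]
```

Agent 3 is discarded because agent 2 is better on both objectives; the remaining agents each offer a different trade-off, and none is strictly better than another.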

Table 1: Comparisons of three types of EC paradigms (i.e., ESs, GAs, and GP) in terms of the differences in mechanisms, target problems in RL, and integration with RL research fields.

Future Research Directions

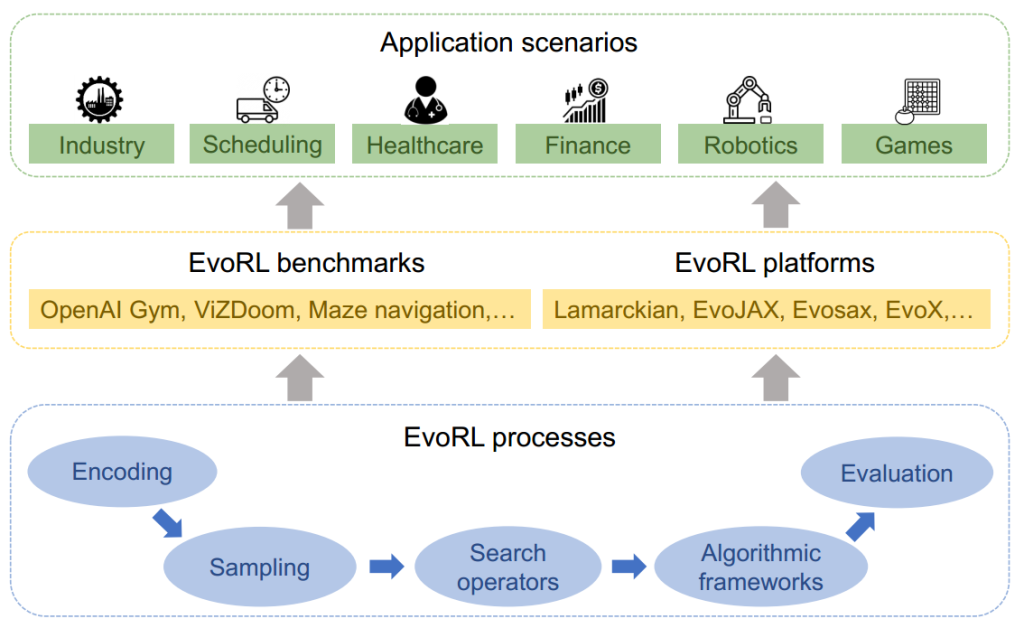

Although EvoRL has been successfully applied to large-scale complex RL tasks, even those with sparse and deceptive rewards, it remains computationally expensive. Desirable research directions therefore include more efficient methods for each EvoRL process (encoding, sampling, search operators, algorithmic frameworks, and evaluation), as well as benchmarks, platforms, and applications, as overviewed in Figure 2.

Figure 2: Overview of future directions from four fields of EvoRL: processes, benchmarks, platforms, and application scenarios.

Citation:

@article{bai2023evolutionary,
  title={Evolutionary Reinforcement Learning: A Survey},
  author={Bai, Hui and Cheng, Ran and Jin, Yaochu},
  journal={Intelligent Computing},
  year={2023},
  publisher={AAAS}
}

![[Intelligent Computing] Evolutionary Reinforcement Learning: A Survey](https://www.emigroup.tech/wp-content/uploads/2023/04/未命名的设计-840x420.png)