Teng Wang, Yixiao Ge, Feng Zheng, Ran Cheng, Ying Shan, Xiaohu Qie, Ping Luo

Abstract:

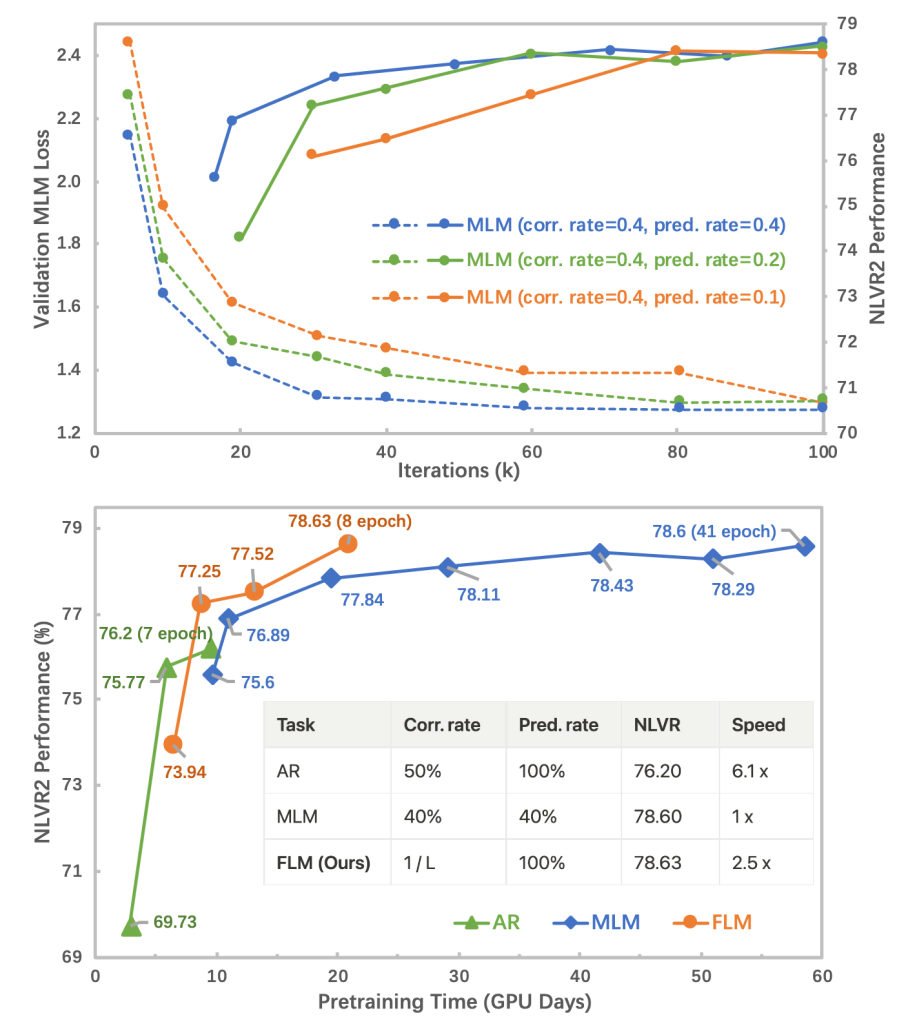

State-of-the-art vision-language pretraining (VLP) methods achieve exemplary performance but suffer from high training costs caused by slow convergence and long training time, especially on large-scale web datasets. A key obstacle to training efficiency is the entanglement of the prediction rate (the percentage of tokens used for reconstruction) and the corruption rate (the percentage of corrupted tokens) in masked language modeling (MLM): a proper corruption rate is achieved at the cost of excluding a large portion of output tokens from the prediction loss. To accelerate the convergence of VLP, we propose a new pretraining task, free language modeling (FLM), which enables a 100% prediction rate with arbitrary corruption rates. FLM frees the prediction rate from its tie to the corruption rate while allowing the corruption span to be customized for each token to be predicted. By exploiting bidirectional contexts more flexibly, FLM-trained models are encouraged to learn better and faster given the same GPU time. Extensive experiments show that FLM achieves a 2.5x reduction in pretraining time compared with MLM-based methods while maintaining competitive performance on both vision-language understanding and generation tasks. Code will be made public at https://github.com/TencentARC/FLM.
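To make the distinction between the two rates concrete, here is a minimal Python sketch. It is an illustration only and not the released FLM implementation: the function names `mlm_rates` and `per_token_corruption`, and the choice of a single contiguous corrupted span per target token, are assumptions made for this example. The first function shows how a shared mask in MLM forces the prediction rate to equal the corruption rate; the second shows how a separate corruption pattern per target token lets every token be predicted under any chosen corruption rate.

```python
import random


def mlm_rates(seq_len, mask_ratio, seed=0):
    """Standard MLM: one shared mask, and only masked tokens enter the loss."""
    rng = random.Random(seed)
    n_mask = max(1, round(seq_len * mask_ratio))
    masked = set(rng.sample(range(seq_len), n_mask))
    corruption_rate = len(masked) / seq_len  # fraction of corrupted tokens
    prediction_rate = len(masked) / seq_len  # fraction of predicted tokens
    return corruption_rate, prediction_rate


def per_token_corruption(seq_len, corruption_rate, seed=0):
    """Hypothetical per-token corruption (an assumption for illustration).

    For each target position i, hide one contiguous span that covers i.
    Returns visible[i][j], which is True when token j may serve as context
    while predicting token i.
    """
    rng = random.Random(seed)
    span = min(seq_len, max(1, round(seq_len * corruption_rate)))
    visible = []
    for i in range(seq_len):
        # Choose a span start so that the span stays in range and covers i.
        start = rng.randint(max(0, i - span + 1), min(i, seq_len - span))
        visible.append([not (start <= j < start + span) for j in range(seq_len)])
    return visible


if __name__ == "__main__":
    corr, pred = mlm_rates(seq_len=20, mask_ratio=0.4)
    print(f"MLM: corruption={corr:.0%}, prediction={pred:.0%}")

    vis = per_token_corruption(seq_len=20, corruption_rate=0.4)
    hidden_per_row = [sum(not v for v in row) / len(row) for row in vis]
    print(f"Per-token: corruption={hidden_per_row[0]:.0%}, prediction=100%")
```

In the second function every row of `visible` corresponds to one predicted token, so all tokens contribute to the loss, while the fraction of hidden context in each row is set independently by `corruption_rate`.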

Results:

Comparison with different language modeling methods

Figure 1. (a) Large prediction rate accelerates training.

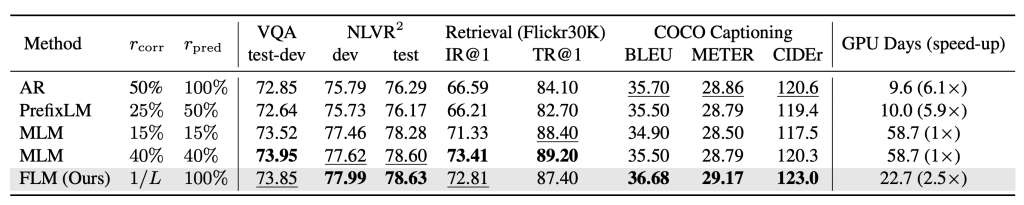

Table 1. Performance comparison of different language modeling methods. r_corr and r_pred denote the corruption and prediction rates, respectively.

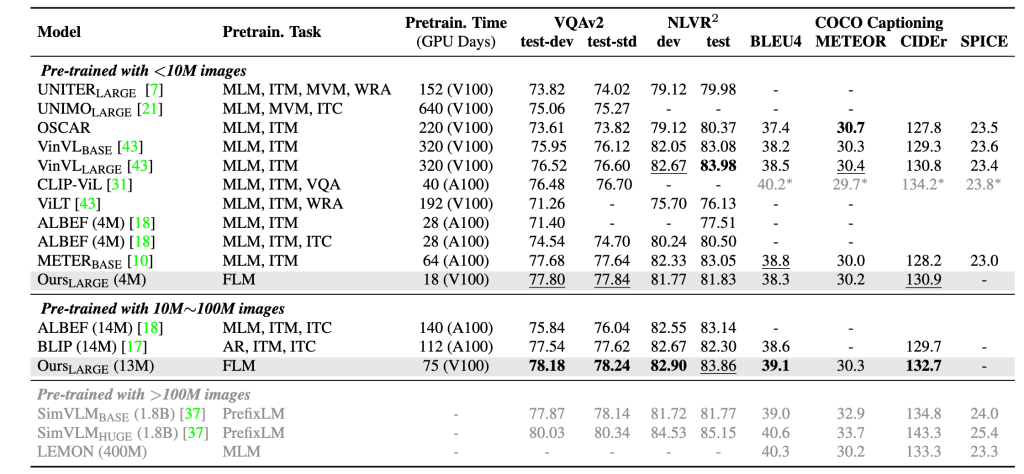

Comparison on VQA, reasoning, and captioning tasks

Table 2. Comparisons with models on visual question answering, visual reasoning, and image captioning tasks.

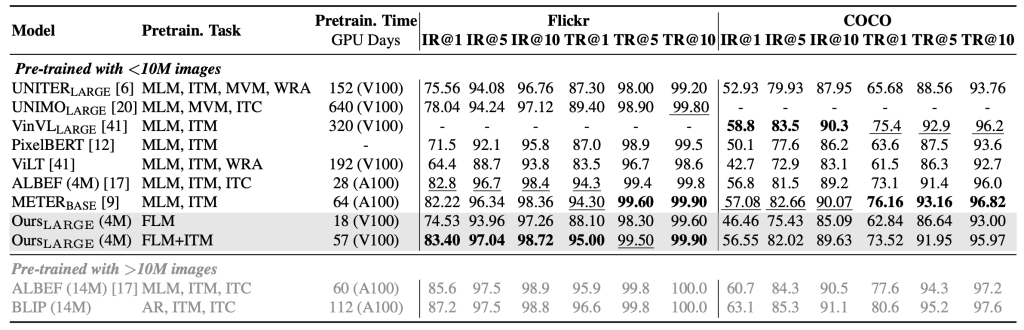

Comparison on image-text retrieval tasks

Table 3. Performance comparisons with models pre-trained on Flickr30k and COCO image retrieval (IR) and text retrieval (TR) tasks.

Citation:

Please cite our work if you find it helpful for your research.

@ARTICLE{wang2022acc,
  title={Accelerating Vision-Language Pretraining with Free Language Modeling},
  author={Wang, Teng and Ge, Yixiao and Zheng, Feng and Cheng, Ran and Shan, Ying and Qie, Xiaohu and Luo, Ping},
  journal={arXiv preprint},
  year={2022}
}

![[CVPR 2023] Accelerating Vision-Language Pretraining with Free Language Modeling](https://www.emigroup.tech/wp-content/uploads/2023/03/FLM封面-840x420.png)