Zhichao Lu, Ran Cheng, Shihua Huang, Haoming Zhang, Changxiao Qiu, and Fan Yang

Abstract:

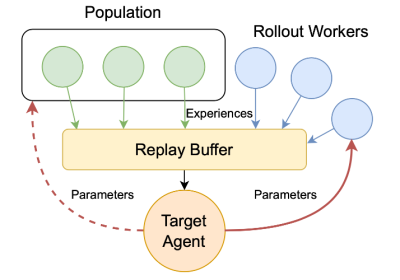

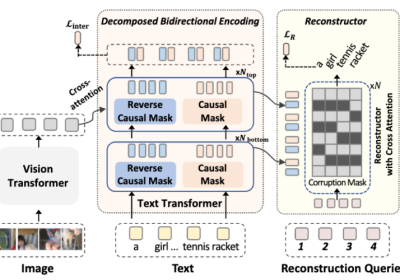

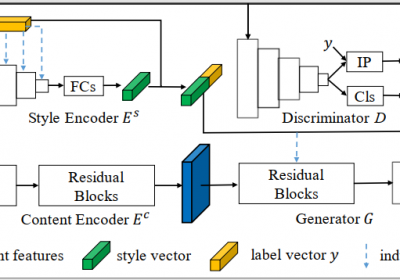

Architectural advances in deep neural networks have led to remarkable progress across a broad array of computer vision tasks. Instead of relying on human expertise, neural architecture search (NAS) has emerged as a promising avenue towards automating the design of architectures. While recent achievements on image classification have suggested opportunities, the promise of NAS has yet to be thoroughly assessed on the more challenging task of semantic segmentation. The main challenges of applying NAS to semantic segmentation arise from two aspects: i) the high-resolution images to be processed; and ii) the additional requirement of real-time inference speed (i.e., real-time semantic segmentation) for applications such as autonomous driving. To meet these challenges, we propose a surrogate-assisted multi-objective method in this paper. Through a series of customized prediction models, our method effectively transforms the original NAS task into an ordinary multi-objective optimization problem. Combined with a hierarchical pre-screening criterion for in-fill selection, our method progressively obtains a set of efficient architectures that trade off segmentation accuracy against inference speed. Empirical evaluations on three benchmark datasets, together with an application using the Huawei Atlas 200 DK, suggest that our method can identify architectures that significantly outperform existing state-of-the-art architectures designed both manually by human experts and automatically by other NAS methods.

[GitHub: https://github.com/mikelzc1990/nas-semantic-segmentation]
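To make the multi-objective framing in the abstract concrete, the following is a minimal sketch of selecting the non-dominated (Pareto-optimal) candidates when both segmentation accuracy and inference speed are to be maximized. It is not the authors' implementation: the surrogate predictors and the hierarchical pre-screening criterion are not reproduced here, and the candidate names, mIoU values, and FPS values are hypothetical placeholders standing in for predicted scores.

```python
from typing import List, Tuple

# (name, predicted mIoU, predicted frames per second); all values are hypothetical.
Candidate = Tuple[str, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """Return True if `a` is no worse than `b` on both objectives
    (higher mIoU, higher FPS) and strictly better on at least one."""
    no_worse = a[1] >= b[1] and a[2] >= b[2]
    strictly_better = a[1] > b[1] or a[2] > b[2]
    return no_worse and strictly_better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep only the candidates that no other candidate dominates."""
    return [
        c for c in candidates
        if not any(dominates(other, c) for other in candidates)
    ]


# Hypothetical predicted scores for five candidate architectures.
candidates = [
    ("arch_a", 0.70, 120.0),
    ("arch_b", 0.74, 80.0),
    ("arch_c", 0.72, 70.0),   # dominated by arch_b
    ("arch_d", 0.78, 35.0),
    ("arch_e", 0.77, 30.0),   # dominated by arch_d
]

front = pareto_front(candidates)
print([name for name, _, _ in front])
```

The surviving candidates form the accuracy/speed trade-off set; in a surrogate-assisted search, only a subset of such candidates would typically be evaluated for real before the predictors are refined.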

Results:

Performance:

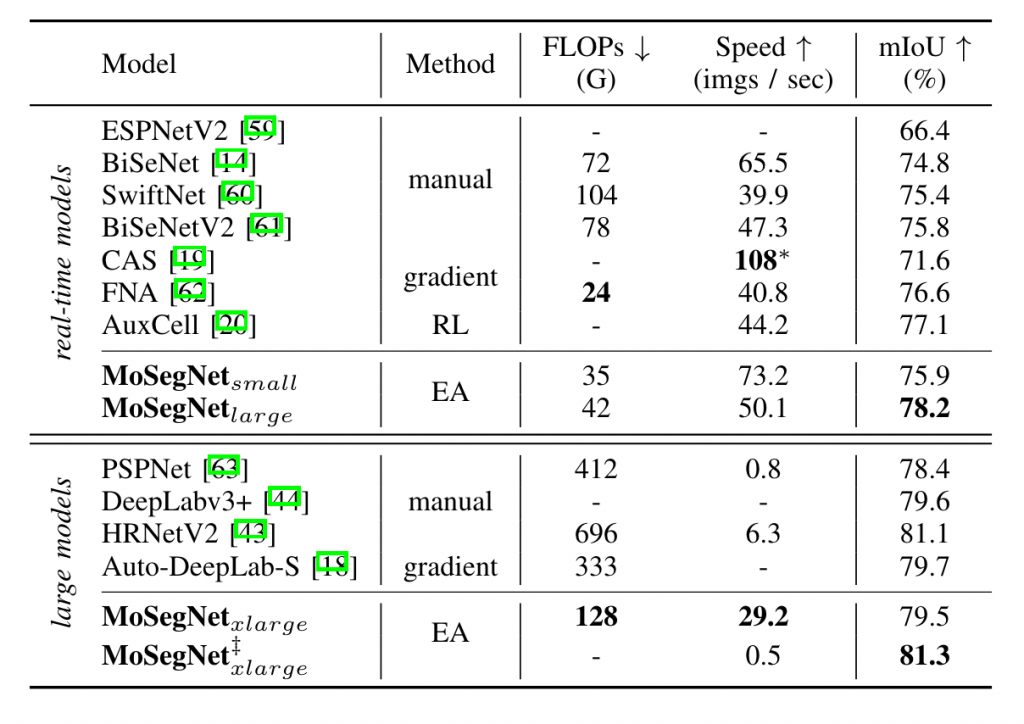

Table 1. Comparison with state-of-the-art models on Cityscapes. Models are grouped into sections and the best result in each section is in bold. “RL” stands for reinforcement learning. ∗ denotes that speed was measured with the high-performance inference framework TensorRT. ‡ denotes an ensemble over multiple input scales.

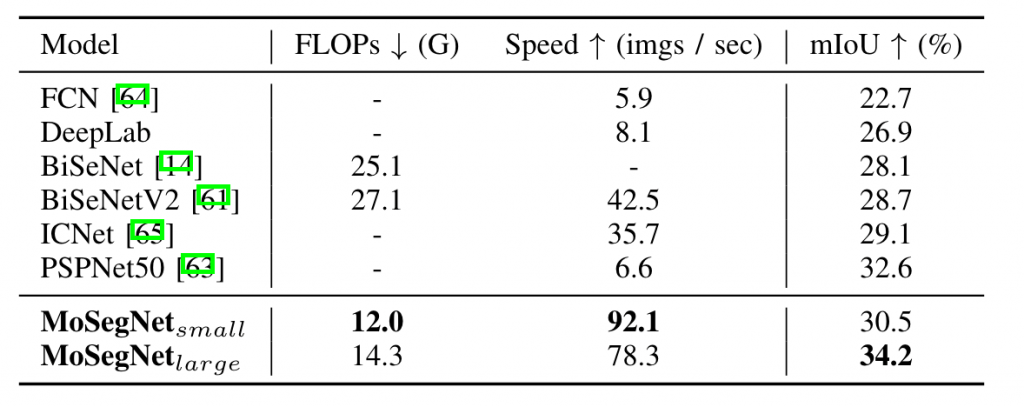

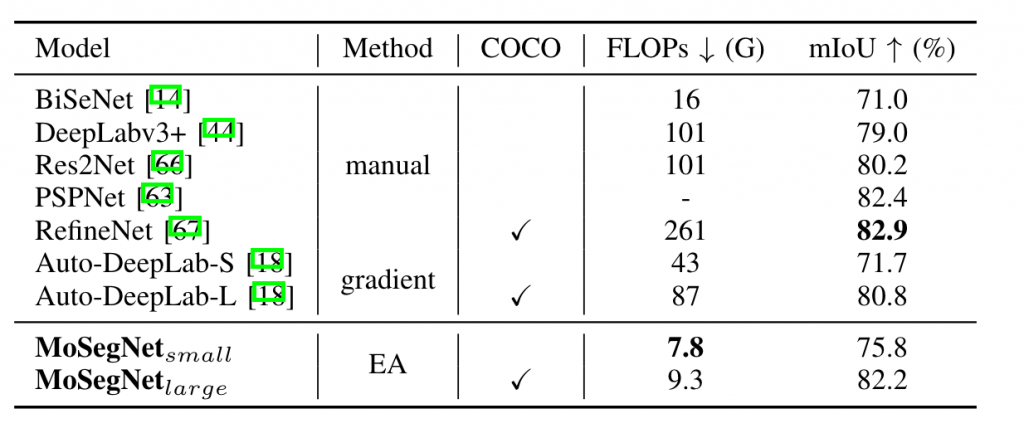

Table 2. Performance comparison on COCO-Stuff-10K. Models are evaluated on network complexity (FLOPs), inference speed, and accuracy (mIoU). The best result in each section is in bold.

Table 3. Performance comparison on PASCAL VOC 2012. Models are evaluated on network complexity (FLOPs) and accuracy (mIoU). “COCO” indicates additional pretraining on MS COCO dataset. The best result in each section is in bold.

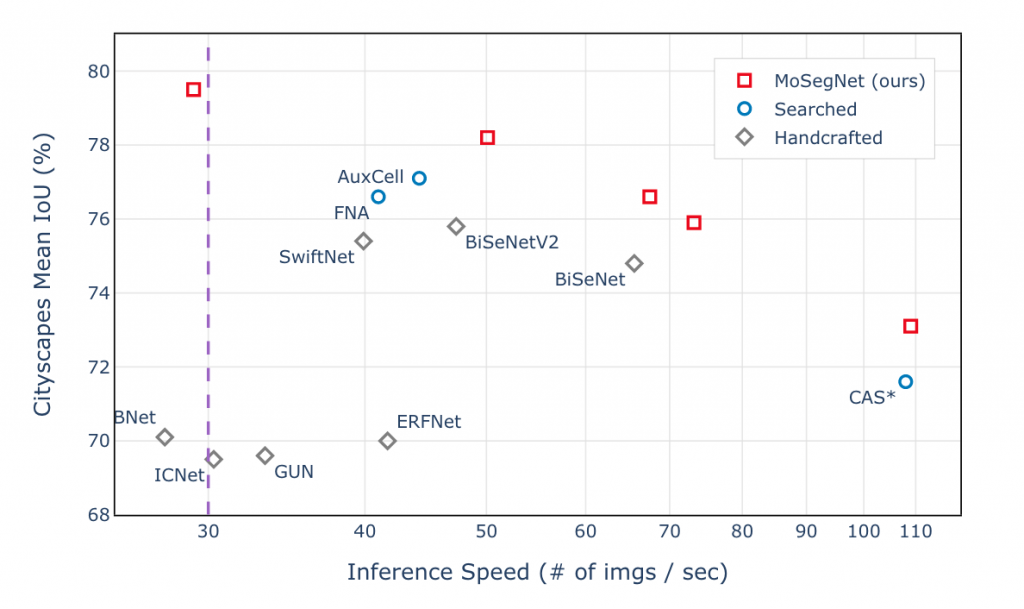

Fig. 1. Speed-accuracy trade-off comparison with other real-time models on Cityscapes. Models with an inference speed of more than 30 images per second (purple line) are considered to be real-time. ∗ denotes that speed was measured with the high-performance inference framework TensorRT. See Table 1 for more comparisons with large (non-real-time) models.
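As a small illustration of the real-time criterion in the caption above, the sketch below converts a per-image latency into images per second and checks it against the 30 images-per-second threshold. The function names and the example latencies are assumptions made for illustration; they are not measurements from the paper.

```python
REAL_TIME_FPS = 30.0  # threshold stated in the Fig. 1 caption


def fps_from_latency_ms(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to images per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def is_real_time(latency_ms: float) -> bool:
    """A model counts as real-time if it processes more than 30 images per second."""
    return fps_from_latency_ms(latency_ms) > REAL_TIME_FPS


# Hypothetical latencies, not results from the paper.
for latency in (20.0, 25.0, 40.0):
    print(latency, fps_from_latency_ms(latency), is_real_time(latency))
```

Equivalently, a model must take less than about 33.3 ms per image to fall on the real-time side of the purple line.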

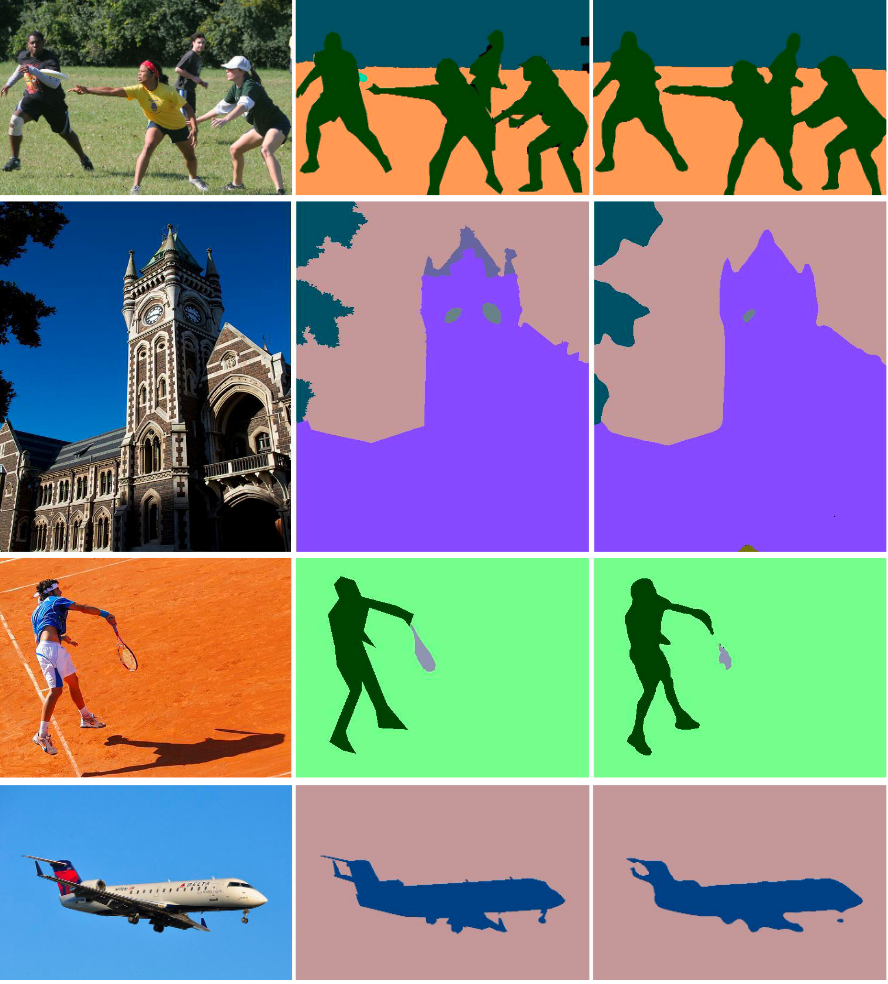

Fig. 2. Visual Comparison on COCO-Stuff. Example pairs visualizing the input image (left), the ground truth (middle), and the predictions from the proposed MoSegNet (right).

Use case:

Fig. 3. Visual Comparison on COCO-Stuff. Example pairs visualizing the input image (left), the ground truth (middle), and the predictions from the proposed MoSegNet (right).

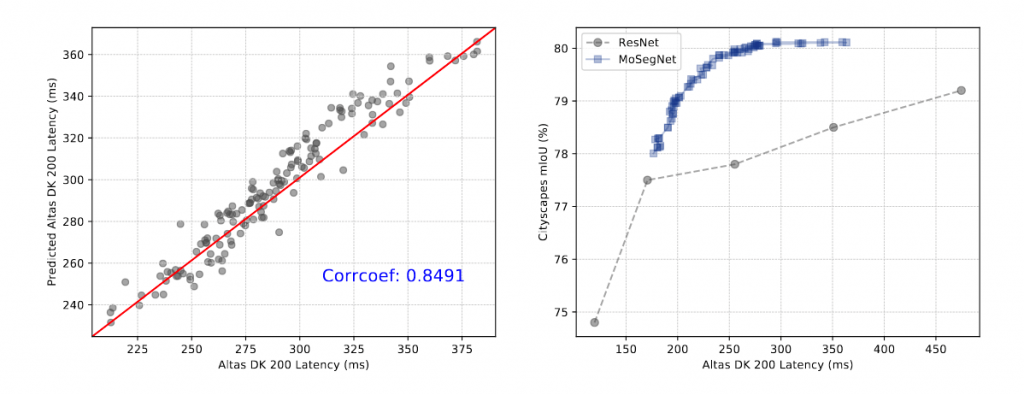

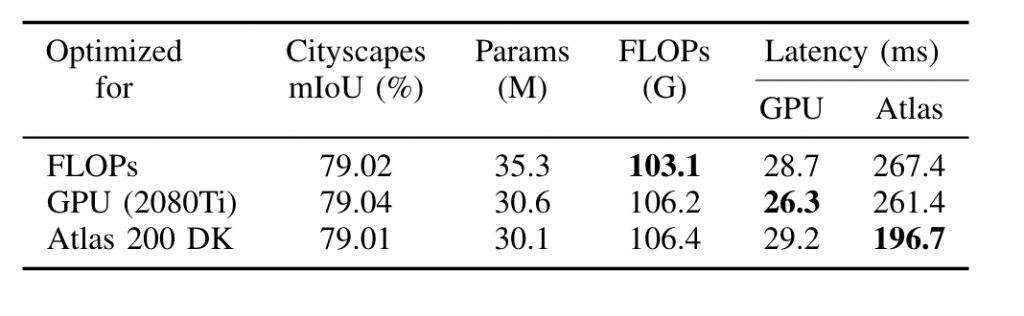

Table 4. Computational efficiency comparison among MoSegNets optimized for FLOPs and latency on RTX 2080Ti and Huawei Atlas 200 DK.

Acknowledgements:

This work was supported by the National Natural Science Foundation of China (Nos. 62106097 and 61906081), the China Postdoctoral Science Foundation (No. 2021M691424), and the Guangdong Provincial Key Laboratory (No. 2020B121201001).

Citation:

@ARTICLE{
  author={Lu, Zhichao and Cheng, Ran and Huang, Shihua and Zhang, Haoming and Qiu, Changxiao and Yang, Fan},
  journal={IEEE Transactions on Artificial Intelligence},
  title={Surrogate-assisted Multiobjective Neural Architecture Search for Real-time Semantic Segmentation},
  year={2022}}

![[IEEE TAI] Surrogate-assisted Multiobjective Neural Architecture Search for Real-time Semantic Segmentation](https://www.emigroup.tech/wp-content/uploads/2022/10/新建项目-1-840x420.png)