Competition News and Updates

June/26/2023: This competition has ended; details of the competition are available on our GitHub. The final ranking of the participating teams is as follows:

🥇 CMOSMA_NCHU

Authors: Chao He, Ming Li, Congxuan Zhang, Hao Chen, Lilin Jie, Leqi Jiang, Junhua Li

Affiliations: Nanchang Hangkong University, Nanjing University of Aeronautics and Astronautics

Description: A Two Population Evolutionary Framework for Handling NAS Problems

🥈 EABSM-NAS

Authors: Chixin Wang, Zhe Wen, Jiajun Chen, Zhen Cui, Boyi Xiao, Weiqin Ying*, Yu Wu*

Affiliations: South China University of Technology, Guangzhou University

Description: Evolutionary Algorithm Based on Surrogate Models in Neural Architecture Search

🥉 DLEA-Niche

Authors: Gui Li, Guining Zhan

Affiliations: Huazhong University of Science and Technology

Description: Dynamic Learning Evolutionary Algorithm with Niche-based Diversity Maintenance Strategy

4. Regional NSGAII

Authors: Xujia Zhang

Affiliations: Southern University of Science and Technology

Description: Regional NSGAII

5. NSGA2-DER

Authors: Pengcheng Jiang, Chenchen Zhu

Affiliations: Nanjing University of Information Science and Technology

Description: Improved Evolutionary Operators Based on Different Encoding Regions

May/1/2023: Because EvoXBench was updated during the competition, and because of the International Workers' Day holiday, some participants reported that they did not have enough time to submit before the deadline. After discussion, the competition organizers decided to extend the submission deadline to May 14, 2023.

Apr/17/2023 (Important): EvoXBench has been updated to version 1.0.3. This release fixes bugs in IN-1K/MOP5, IN-1K/MOP6, and the NB201 benchmark, so participants must re-run these test instances to obtain accurate results. To avoid unforeseen issues, we strongly recommend that all participants and users use the latest version of EvoXBench (i.e., pip install --upgrade evoxbench).
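To confirm that an existing installation already includes the 1.0.3 fixes, a minimal sketch using Python's standard importlib.metadata could look like the following. The helper names are hypothetical, and it assumes the package is installed under the distribution name evoxbench, as the pip command above suggests.

```python
import re
from importlib.metadata import PackageNotFoundError, version

MINIMUM = (1, 0, 3)  # release that fixed IN-1K/MOP5, IN-1K/MOP6 and NB201


def parse_release(v: str) -> tuple:
    """Return the leading numeric release segment, e.g. '1.0.3.post1' -> (1, 0, 3)."""
    m = re.match(r"\d+(?:\.\d+)*", v)
    return tuple(int(p) for p in m.group(0).split(".")) if m else ()


def evoxbench_is_current(minimum: tuple = MINIMUM) -> bool:
    """Hypothetical helper: True if evoxbench is installed and at least `minimum`."""
    try:
        installed = version("evoxbench")
    except PackageNotFoundError:
        return False  # not installed at all
    return parse_release(installed) >= minimum


if __name__ == "__main__":
    print("EvoXBench up to date:", evoxbench_is_current())
```

Tuple comparison makes "1.0.10" correctly rank above "1.0.3", which a plain string comparison would get wrong.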

Apr/4/2023: The winner of this competition will receive a $500 award funded by IEEE CIS.

Background

The advancement of neural architecture search (NAS) has facilitated the automation of deep learning network design, leading to improved performance on various challenging computer vision tasks. Recently, emerging application scenarios of deep learning, such as autonomous driving, have placed higher demands on network architectures, which must satisfy multiple design criteria, including the number of parameters/weights, the number of floating-point operations, and inference latency, among others. From an optimization point of view, NAS tasks involving multiple design criteria are intrinsically multiobjective optimization problems (MOPs). Hence, it is reasonable to adopt evolutionary multiobjective optimization (EMO) algorithms to tackle them.

Due to the black-box nature of NAS, its complex properties from an optimization point of view (e.g., discrete decision variables, multimodal and noisy fitness landscapes, and expensive and many objectives) pose great challenges to EMO algorithms. Additionally, the adoption of EMO algorithms for NAS still lags well behind progress in the general field of NAS research. Consequently, the main goal of this competition is to promote research on EMO algorithms for NAS tasks.

Task

In the Multiobjective Neural Architecture Search Competition, participants should use an EMO algorithm of their own design to solve the given NAS tasks. The algorithm can be brand-new or adapted from a previously proposed algorithm, but it must be relevant to the field of evolutionary computation. We provide an end-to-end pipeline, dubbed EvoXBench, that generates benchmark test suites on which EMO algorithms run efficiently without requiring GPUs or PyTorch/TensorFlow. Specifically, EvoXBench covers seven search spaces (NAS-Bench-101, NAS-Bench-201, NATS-Bench, DARTS, ResNet50, Transformer, MNV3), two types of architectures (convolutional neural networks and vision Transformers), two widely studied datasets (CIFAR-10 and ImageNet), six types of hardware (GPU, mobile phone, FPGA, among others), and up to six types of optimization objectives (prediction error, FLOPs, latency, energy consumption, among others). EvoXBench provides two representative benchmark test suites, i.e., C-10/MOP and IN-1K/MOP.

1. C-10/MOP Test Suite

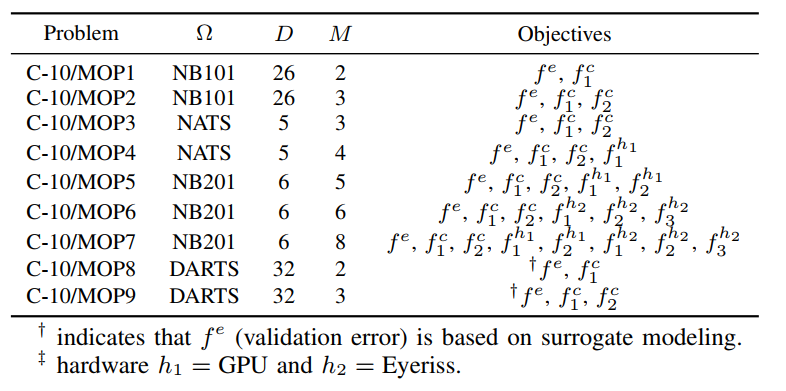

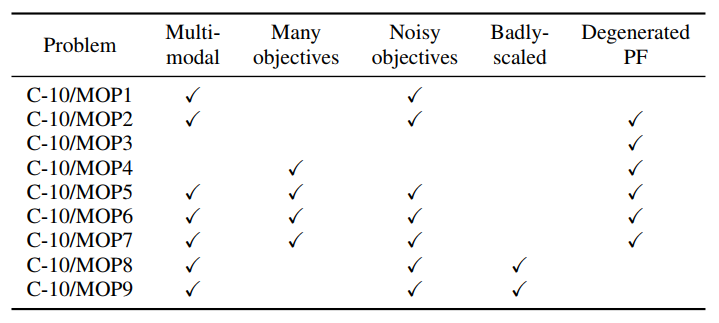

There are nine instances in C-10/MOP, tailored for image classification on CIFAR-10 (a 10-class image classification dataset). As summarized in Table I, the number of objectives ranges from two to eight, including \( f^e \) (the prediction error of the model), \( f^c \) (objectives related to model complexity, i.e., the number of weights and FLOPs), and \( f^{\mathcal {H}} \) (objectives related to hardware devices, i.e., hardware latency, hardware energy consumption, and arithmetic intensity). The properties of the C-10/MOP instances are summarized in Table II.
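Because every instance has several objectives, candidate architectures are compared by Pareto dominance rather than by a single score. A minimal sketch, assuming all objectives are to be minimized and each objective vector is a plain list of numbers; the function names are illustrative, not part of EvoXBench.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b: no worse in every objective, better in at least one."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def nondominated(F: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the objective vectors that no other vector in F dominates (O(n^2) scan)."""
    return [f for i, f in enumerate(F)
            if not any(dominates(g, f) for j, g in enumerate(F) if j != i)]


# (prediction error, FLOPs) pairs; the last one is dominated by [0.24, 7247656].
F = [[0.19, 39606472], [0.24, 7247656], [0.23, 7531312], [0.25, 8000000]]
front = nondominated(F)
```

The quadratic scan is fine for one population; non-dominated sorting as used in NSGA-II is the usual choice when the full front ranking is needed.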

TABLE I: Definition of the proposed C-10/MOP test suite.

TABLE II: Property of test instances in C-10/MOP.

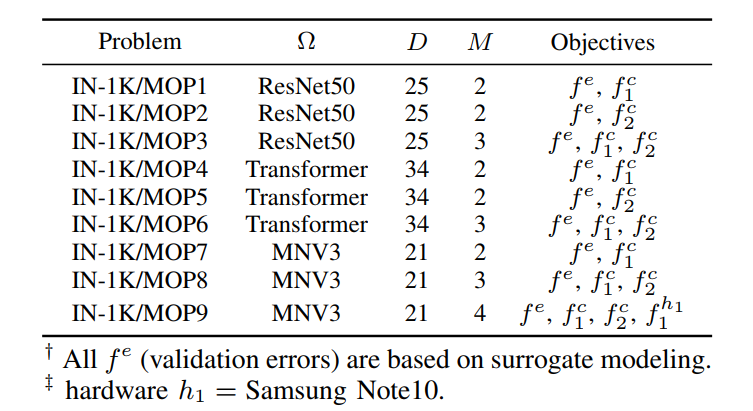

2. IN-1K/MOP Test Suite

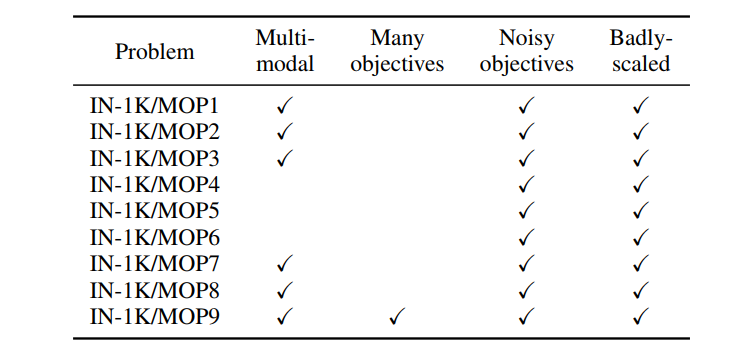

IN-1K/MOP also contains nine instances, tailored for image classification on ImageNet-1K (a 1000-class image classification dataset). The definitions and properties of IN-1K/MOP are summarized in Table III and Table IV. Since architectures from the ResNet50 and Transformer search spaces are resource-intensive and thus unsuitable for efficient hardware deployment, we do not consider hardware-related objectives for them. Only IN-1K/MOP9 adds a hardware-related objective, i.e., the latency on a mobile phone.

TABLE III: Definition of the proposed IN-1K/MOP test suite.

TABLE IV: Property of test instances in IN-1K/MOP.

3. Parameter settings

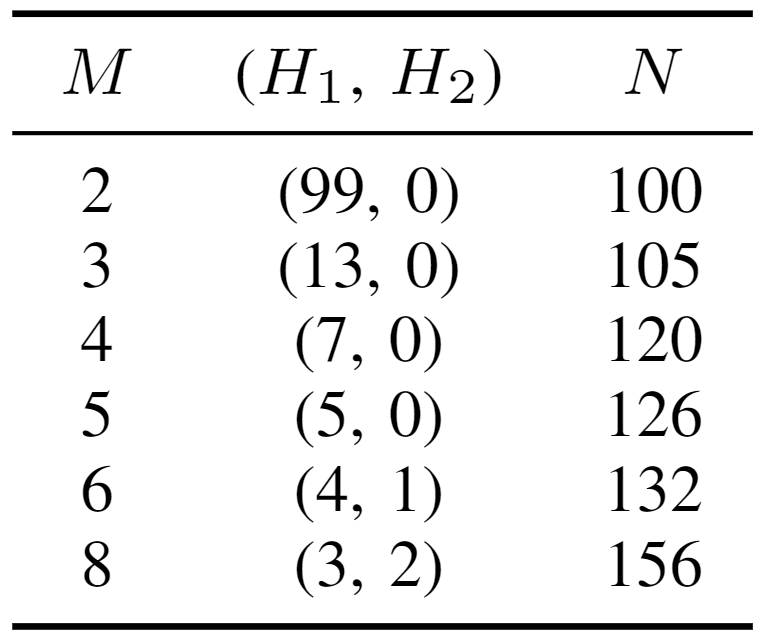

As shown in Table V, the population size is set according to the number of objectives. Each algorithm must be run 31 times independently on each test instance, with a budget of 10,000 fitness evaluations per run.
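One way to make sure an algorithm respects the evaluation budget is to route every evaluation through a counter. The sketch below is a hypothetical wrapper, not part of EvoXBench; it reads the setting as 10,000 evaluations per run, uses a toy objective instead of a real benchmark problem, and uses random sampling only as a placeholder for an EMO algorithm's variation step. The population size of 100 is illustrative; take the real values from Table V.

```python
import random
from typing import Callable, List, Sequence


class EvaluationBudgetExceeded(RuntimeError):
    """Raised when a run asks for more fitness evaluations than allowed."""


class BudgetedEvaluator:
    """Hypothetical wrapper that counts fitness evaluations against a fixed budget."""

    def __init__(self, objective: Callable[[Sequence[int]], List[float]], budget: int = 10_000):
        self.objective = objective
        self.budget = budget
        self.used = 0

    def __call__(self, population: List[Sequence[int]]) -> List[List[float]]:
        if self.used + len(population) > self.budget:
            raise EvaluationBudgetExceeded(
                f"requested {len(population)}, only {self.budget - self.used} left")
        self.used += len(population)
        return [self.objective(x) for x in population]


def toy_objective(x: Sequence[int]) -> List[float]:
    """Stand-in bi-objective function; a real run would evaluate an EvoXBench problem."""
    return [float(sum(x)), float(len(x) - sum(x))]


def placeholder_run(seed: int, pop_size: int, budget: int = 10_000, n_var: int = 10) -> int:
    """One independent run; random sampling stands in for the real variation operators."""
    rng = random.Random(seed)  # a distinct seed per run keeps the runs independent
    evaluate = BudgetedEvaluator(toy_objective, budget)
    while evaluate.used + pop_size <= budget:  # stop before a batch would overrun the budget
        offspring = [[rng.randint(0, 1) for _ in range(n_var)] for _ in range(pop_size)]
        evaluate(offspring)
    return evaluate.used


used = [placeholder_run(seed, pop_size=100) for seed in range(1, 32)]  # 31 runs
```

With a population size that does not divide 10,000 (e.g., 91), this loop leaves the last few evaluations unused; evaluating a smaller final batch is an equally valid choice.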

TABLE V: Parameter settings.

Tutorials

1. To better understand EvoXBench and the formulations of the NAS problems, you can read the paper [1] and watch the tutorial video.

Tutorial materials can be downloaded from: tutorial_materials.zip

2. Install EvoXBench. Installation instructions are available on the EvoXBench project site.

3. Run the example code to familiarize yourself with how to use EvoXBench. [Python] [MATLAB] [Java]

4. Develop your own EMO algorithm and use the provided performance indicator tool to calculate the indicators and check the performance of your algorithm.

For MATLAB users, we recommend developing algorithms on the PlatEMO platform. The benchmark test suites in EvoXBench are embedded in PlatEMO, so you only need to read the examples to learn how to use them. Before running PlatEMO, run “evoxbenchrpc” in a terminal; then run the MATLAB code of your algorithm.

Evaluation Criteria

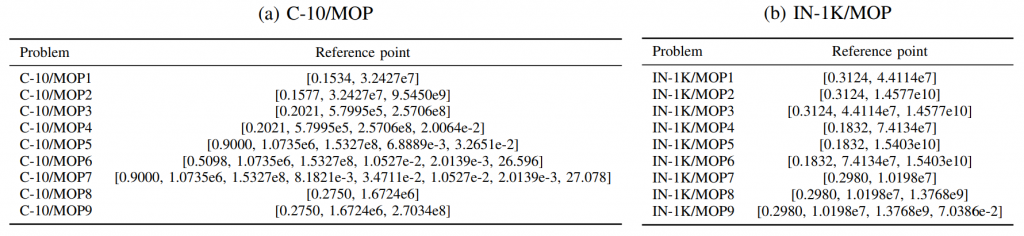

In this competition, we use Hypervolume (HV) and Inverted Generational Distance (IGD) as performance metrics. IGD is used only for C-10/MOP1 – C-10/MOP7, whose true Pareto fronts (PFs) are available. For problems built on surrogate models (i.e., C-10/MOP8 – C-10/MOP9 and IN-1K/MOP1 – IN-1K/MOP9), the true PFs are unknown, so IGD cannot be calculated. HV is used for all test instances, and the reference points for calculating HV are provided in Table VI.

HV and IGD for all submitted results will be calculated in Python on the same computer.
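For reference, IGD averages, over the points of the true PF, the Euclidean distance to the nearest obtained solution, so lower is better. The sketch below implements that textbook definition only; it applies no objective normalization, which the official indicator tool may perform (worth checking, since prediction error and FLOPs differ by orders of magnitude).

```python
import math
from typing import List, Sequence


def igd(obtained: List[Sequence[float]], reference_pf: List[Sequence[float]]) -> float:
    """Inverted Generational Distance: mean distance from each true-PF point
    to its nearest obtained point (lower is better)."""
    if not obtained or not reference_pf:
        raise ValueError("both point sets must be non-empty")
    total = sum(min(math.dist(r, a) for a in obtained) for r in reference_pf)
    return total / len(reference_pf)


# Toy example: the obtained set covers only one end of a two-point front.
value = igd([[0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])  # (0 + sqrt(2)) / 2
```

Because the average is taken over the true PF rather than over the obtained set, IGD rewards both convergence and coverage of the whole front.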

TABLE VI: The reference points used on (a) C-10/MOP and (b) IN-1K/MOP test suites.
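HV measures the volume of objective space that the obtained solutions dominate, bounded by a reference point, so higher is better. The sketch below covers only the two-objective case, assuming all objectives are minimized, using a sweep over the first objective; instances with three or more objectives need a general method (e.g., the WFG algorithm or a Monte Carlo estimate). The real reference points must be taken from Table VI, and any normalization the official tool applies is not reproduced here.

```python
from typing import List, Sequence


def hv_2d(points: List[Sequence[float]], ref: Sequence[float]) -> float:
    """Hypervolume of a set of 2-objective (minimized) points w.r.t. reference point ref."""
    # Only points strictly better than the reference point in both objectives contribute.
    pts = sorted((p[0], p[1]) for p in points if p[0] < ref[0] and p[1] < ref[1])
    volume, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:            # sweep in increasing f1
        if f2 < best_f2:          # points not improving f2 are dominated; skip them
            volume += (ref[0] - f1) * (best_f2 - f2)  # horizontal slab added by this point
            best_f2 = f2
    return volume


area = hv_2d([[1, 3], [2, 2], [3, 1]], ref=[4, 4])
```

Each non-dominated point adds the horizontal slab between its own f2 and the previous best f2, extending from its f1 to the reference point, so overlaps are never counted twice.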

Submission

Please submit your algorithm as a compressed folder (a .zip file) to zhenyuliang97@gmail.com no later than May 14, 2023 (extended from April 30, 2023).

The .zip file should contain a report, algorithm source code and 18 result files.

The report should contain the following information: (i) title; (ii) names, affiliations, and emails of the participants; (iii) a description of the algorithm; (iv) the mean and standard deviation of the HV and IGD values over 31 runs on each test instance. Note that IGD is considered only for C-10/MOP1 – C-10/MOP7, whose true PFs are known from exhaustive evaluation.
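The mean and standard deviation required in item (iv) can be computed from the per-run indicator values, for example from the per-run dictionaries stored in the result files. This sketch uses Python's statistics module and assumes the sample standard deviation (n - 1 denominator); the rules do not say which variant is expected. The HV values shown are illustrative, not real results.

```python
import statistics
from typing import Dict, List


def summarize(runs: List[dict], key: str) -> Dict[str, float]:
    """Mean and sample standard deviation of one indicator ("HV" or "IGD") over runs."""
    values = [float(r[key]) for r in runs if key in r]
    if len(values) < 2:
        raise ValueError(f"need at least two runs with {key!r}")
    return {"mean": statistics.mean(values), "std": statistics.stdev(values)}


# Illustrative per-run dictionaries (a full submission has 31 per instance).
runs = [{"run": 1, "HV": 0.88}, {"run": 2, "HV": 0.90}, {"run": 3, "HV": 0.92}]
summary = summarize(runs, "HV")
```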

The results for each instance should be saved in JSON format (i.e., 18 .json files in total). Each .json file stores a list of dictionaries, one per run.

The structure of the .json file is as follows:

[{"run": 1, "X": [[0, 1, 2, …], [0, 0, 1, …], …, [1, 1, 1, …]], "F": [[0.19, 39606472], [0.24, 7247656], …, [0.23, 7531312]], "IGD": 0.01, "HV": 0.9},

{"run": 2, "X": [[0, 1, 2, …], [0, 0, 1, …], …, [1, 1, 1, …]], "F": [[0.19, 39606472], [0.24, 7247656], …, [0.23, 7531312]], "IGD": 0.01, "HV": 0.9},

…,

{"run": 31, "X": [[0, 1, 2, …], [0, 0, 1, …], …, [1, 1, 1, …]], "F": [[0.19, 39606472], [0.24, 7247656], …, [0.23, 7531312]], "IGD": 0.01, "HV": 0.9}]

where “run” is the index of the run on the test instance; “X” lists the encoded network architectures (i.e., decision vectors); “F” lists the corresponding objective values in validation mode; and “IGD” and “HV” are the performance metric values. Metric values are not required in the .json file, but we recommend including them.
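A minimal sketch of producing one result file in this layout with Python's json module. The file name in the usage line is hypothetical (the rules do not fix a naming scheme), and the int()/float() conversions assume X and F might arrive as NumPy arrays, whose scalar types json cannot serialize directly.

```python
import json
from typing import List, Optional, Sequence


def make_record(run: int, X: List[Sequence[int]], F: List[Sequence[float]],
                igd: Optional[float] = None, hv: Optional[float] = None) -> dict:
    """Build one per-run entry; "IGD" and "HV" are optional but recommended."""
    record = {
        "run": run,
        "X": [[int(v) for v in x] for x in X],    # encoded architectures (decision vectors)
        "F": [[float(v) for v in f] for f in F],  # objective values in validation mode
    }
    if igd is not None:
        record["IGD"] = float(igd)
    if hv is not None:
        record["HV"] = float(hv)
    return record


def write_results(path: str, records: List[dict]) -> None:
    """Write the list of per-run dictionaries (31 for a full submission) to one .json file."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(records, fh)


if __name__ == "__main__":
    # Hypothetical usage with toy data for a single run.
    records = [make_record(1, [[0, 1, 2], [0, 0, 1]],
                           [[0.19, 39606472], [0.24, 7247656]], hv=0.9)]
    write_results("C-10MOP1.json", records)
```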

Awards

IEEE CEC 2023 conference certificates and prize money will be awarded to the winner of this competition.

Questions

If you have any questions about the competition, please contact Zhenyu Liang (zhenyuliang97@gmail.com) or join the QQ group to ask questions (group number: 297969717).

Organizers

Name: Zhenyu Liang

Affiliation: Department of Computer Science and Engineering, Southern University of Science and Technology, Shenzhen, China. (Email: zhenyuliang97@gmail.com)

Short Bio: Zhenyu Liang received the B.Sc. degree from Southern University of Science and Technology in 2020. He is currently a master's student in the Department of Computer Science and Engineering, Southern University of Science and Technology. His main research interests are evolutionary algorithms and evolutionary multi- and many-objective optimization.

Name: Zhichao Lu

Affiliation: School of Software Engineering, Sun Yat-sen University, China. (Email: luzhichaocn@gmail.com)

Short Bio: Zhichao Lu is currently an Assistant Professor in the School of Software Engineering at Sun Yat-sen University, China. He received his Ph.D. degree in Electrical and Computer Engineering from Michigan State University, USA, in 2020.

His current research focuses on the intersections of evolutionary computation, learning, and optimization, notably on developing efficient, reliable, and automated machine learning algorithms and systems. He received the GECCO-2019 best paper award.

Name: Ran Cheng

Affiliation: Department of Computer Science and Engineering, Southern University of Science and Technology, Shenzhen, China. (Email: ranchengcn@gmail.com)

Short Bio: Ran Cheng is currently an Associate Professor with the Southern University of Science and Technology (SUSTech), China. He received the PhD degree in computer science from the University of Surrey, UK, in 2016.

Dr Ran Cheng serves as an Associate Editor/Editorial Board Member for several journals, including IEEE Transactions on Evolutionary Computation, IEEE Transactions on Cognitive and Developmental Systems, IEEE Transactions on Artificial Intelligence, and IEEE Transactions on Emerging Topics in Computational Intelligence. He is the recipient of the IEEE Transactions on Evolutionary Computation Outstanding Paper Awards (2018, 2021), the IEEE CIS Outstanding PhD Dissertation Award (2019), and the IEEE Computational Intelligence Magazine Outstanding Paper Award (2020). Dr Ran Cheng is the Founding Chair of the IEEE Computational Intelligence Society (CIS) Shenzhen Chapter. He is a Senior Member of IEEE.

Name: Yaochu Jin

Affiliation: Faculty of Technology, Bielefeld University, Germany. (Email: yaochu.jin@uni-bielefeld.de)

Short Bio: Yaochu Jin is an Alexander von Humboldt Professor for Artificial Intelligence endowed by the German Federal Ministry of Education and Research, Chair of Nature Inspired Computing and Engineering, Faculty of Technology, Bielefeld University, Germany. He is also a Distinguished Chair, Professor in Computational Intelligence, Department of Computer Science, University of Surrey, Guildford, U.K. He was a “Finland Distinguished Professor” at the University of Jyväskylä, Finland, a “Changjiang Distinguished Visiting Professor” at Northeastern University, China, and a “Distinguished Visiting Scholar” at the University of Technology Sydney, Australia. His main research interests include evolutionary optimization, evolutionary learning, trustworthy machine learning, and evolutionary developmental systems.

Prof Jin is presently the President-Elect of the IEEE Computational Intelligence Society, and the Editor-in-Chief of Complex & Intelligent Systems. He was named by the Web of Science as “a Highly Cited Researcher” from 2019 to 2022 consecutively. He is a Member of Academia Europaea and Fellow of IEEE.

Acknowledgements

This work was supported by the Program for Guangdong Introducing Innovative and Entrepreneurial Teams (Grant No. 2017ZT07X386).

References

[1] Z. Lu, R. Cheng, Y. Jin, K. C. Tan and K. Deb, “Neural Architecture Search as Multiobjective Optimization Benchmarks: Problem Formulation and Performance Assessment,” in IEEE Transactions on Evolutionary Computation, doi: 10.1109/TEVC.2022.3233364.